Today, data is more important than ever to companies. With the right tools and skills, a company can use its data to understand its customers better and tailor its products to them, which translates directly into revenue. Protecting that data is just as important, and can be mission-critical. Some data is kept in object storage such as S3 buckets, and other data is stored in databases.

The Challenge

The challenge was backing up our database. A security requirement stated that backups of our data must be stored in a separate, private location, outside the account that holds the main resources, and kept safe there. The backups had to run automatically on a cron schedule so that no human action is needed, and they had to remain highly available for the entire retention period.

Prerequisites for this Solution

A separate AWS account for storing the backups, with a trust relationship between the two accounts (set up through AWS Organizations or any other method you like).

The Solution

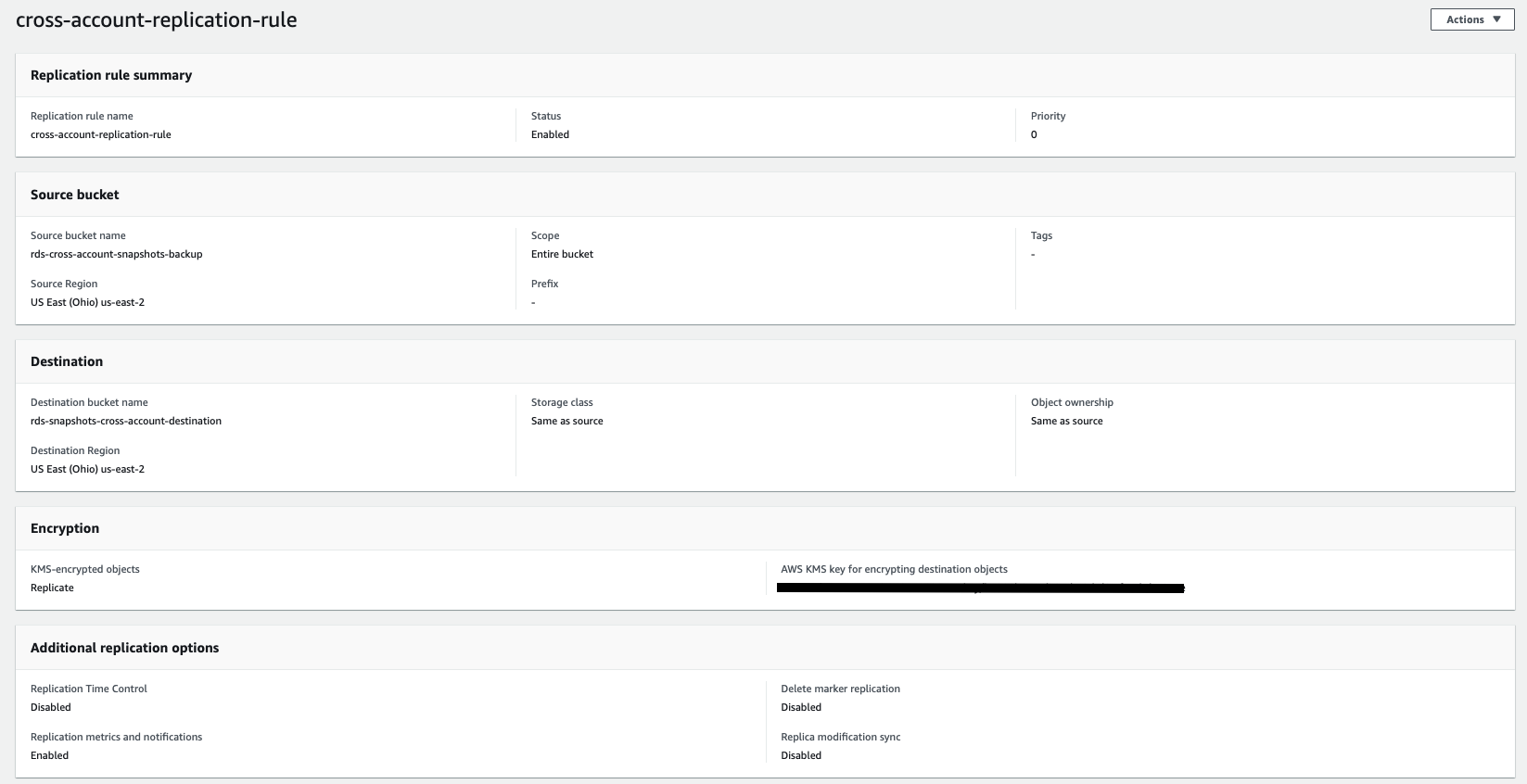

Using AWS Backup, we defined a vault and a backup plan that backs up the RDS database to the vault daily. The backups are encrypted with an AWS KMS key and kept for 7 days. When a backup job completes, a CloudWatch Events (now Amazon EventBridge) rule fires and invokes a Lambda function that starts an Export to S3 task for the new snapshot. The S3 bucket is encrypted at rest and has a replication rule that copies the data to an S3 bucket in a second AWS account, encrypted with the same KMS key.
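The post does not show the replication rule itself. Below is a minimal sketch of what such a rule could look like as a payload for boto3's put_bucket_replication. It assumes both buckets already have versioning enabled, because S3 replication requires it. The destination bucket name is hypothetical. The role and key ARNs are the placeholders used elsewhere in this post.

```python
import json


def build_replication_config(replication_role_arn, dest_bucket_arn,
                             dest_account_id, replica_kms_key_arn):
    """Build a ReplicationConfiguration payload for SSE-KMS encrypted objects."""
    return {
        "Role": replication_role_arn,  # role S3 assumes to copy the objects
        "Rules": [
            {
                "ID": "replicate-rds-exports",
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # replicate every object in the bucket
                "DeleteMarkerReplication": {"Status": "Disabled"},
                # SSE-KMS encrypted objects are only replicated when this is enabled
                "SourceSelectionCriteria": {
                    "SseKmsEncryptedObjects": {"Status": "Enabled"}
                },
                "Destination": {
                    "Bucket": dest_bucket_arn,
                    "Account": dest_account_id,
                    # Make the backup account the owner of the replicas
                    "AccessControlTranslation": {"Owner": "Destination"},
                    # Encrypt the replicas with the shared customer managed key
                    "EncryptionConfiguration": {"ReplicaKmsKeyID": replica_kms_key_arn},
                },
            }
        ],
    }


def apply_replication(s3_client, source_bucket, config):
    # s3_client is expected to be boto3.client("s3")
    s3_client.put_bucket_replication(
        Bucket=source_bucket, ReplicationConfiguration=config
    )


config = build_replication_config(
    "arn:aws:iam::123456789:role/service-role/s3crr_role_for_rds-cross-account-snapshots-backup",
    "arn:aws:s3:::rds-snapshots-backup-replica",  # hypothetical destination bucket
    "987654321",
    "arn:aws:kms:us-east-2:123456789:key/key_Id",
)
print(json.dumps(config, indent=2))
```

The destination bucket in the backup account also needs a bucket policy that allows this role to replicate into it. That policy is not shown here.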

AWS Backup Rule:

| Setting | Value |
|---|---|
| Backup rule name | daily-backup |
| Frequency | Daily, at 05:00 AM UTC |
| Start within | 8 hours |
| Complete within | 7 days |
| Lifecycle | Never transition to cold storage; expire after 1 week |
| Backup vault | pdq-backup-vault |
| Tags added to recovery points | None |
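The same rule can be expressed as a payload for boto3's create_backup_plan. Below is a minimal sketch that maps each setting in the table to its API field, including the cron expression mentioned in the challenge. The plan name and selection name are hypothetical. The backup client and the resource ARNs are passed in rather than created here.

```python
def build_backup_plan(vault_name="pdq-backup-vault"):
    """Mirror the daily-backup rule above as a CreateBackupPlan payload."""
    return {
        "BackupPlanName": "pdq-daily-backup-plan",  # hypothetical plan name
        "Rules": [
            {
                "RuleName": "daily-backup",
                "TargetBackupVaultName": vault_name,
                # AWS cron fields: minutes hours day-of-month month day-of-week year
                "ScheduleExpression": "cron(0 5 * * ? *)",  # every day at 05:00 UTC
                "StartWindowMinutes": 8 * 60,  # start within 8 hours
                "CompletionWindowMinutes": 7 * 24 * 60,  # complete within 7 days
                # No MoveToColdStorageAfterDays, so backups never go to cold storage
                "Lifecycle": {"DeleteAfterDays": 7},  # expire after 1 week
            }
        ],
    }


def create_plan(backup_client, db_instance_arn, backup_role_arn):
    # backup_client is expected to be boto3.client("backup")
    plan = backup_client.create_backup_plan(BackupPlan=build_backup_plan())
    # Assign the RDS instance to the plan so the rule actually backs it up
    backup_client.create_backup_selection(
        BackupPlanId=plan["BackupPlanId"],
        BackupSelection={
            "SelectionName": "rds-daily",  # hypothetical selection name
            "IamRoleArn": backup_role_arn,
            "Resources": [db_instance_arn],
        },
    )
    return plan["BackupPlanId"]
```

For backup_role_arn, the role that appears in the key policy later in this post (AWSBackupDefaultServiceRole) would be a natural choice.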

Event Configuration:

```json
{
  "source": ["aws.backup"],
  "detail-type": ["Backup Job State Change"],
  "detail": {
    "backupVaultName": ["pdq-backup-vault"],
    "state": ["COMPLETED"]
  }
}
```
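To see why this pattern fires only for completed jobs in pdq-backup-vault, here is a simplified sketch of EventBridge's matching rules. It handles only exact values listed in arrays and nested objects, not prefix, numeric, or anything-but filters. Every field named in the pattern must be present and match, and fields that the pattern does not mention are ignored. The sample event is a hypothetical, trimmed example.

```python
EVENT_PATTERN = {
    "source": ["aws.backup"],
    "detail-type": ["Backup Job State Change"],
    "detail": {
        "backupVaultName": ["pdq-backup-vault"],
        "state": ["COMPLETED"],
    },
}


def matches(pattern, event):
    """Simplified EventBridge matching: exact values and nested objects only."""
    for key, expected in pattern.items():
        if key not in event:
            return False  # every field named in the pattern must be present
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the corresponding sub-object
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif isinstance(actual, list):
            # An array in the event matches if any element is an allowed value
            if not any(item in expected for item in actual):
                return False
        elif actual not in expected:
            return False
    return True  # fields absent from the pattern are ignored


sample_event = {  # hypothetical, trimmed event
    "source": "aws.backup",
    "detail-type": "Backup Job State Change",
    "resources": ["arn:aws:rds:us-east-2:123456789:snapshot:example"],
    "detail": {"backupVaultName": "pdq-backup-vault", "state": "COMPLETED"},
}

print(matches(EVENT_PATTERN, sample_event))  # True
```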

Lambda

Lambda Configuration

```json
{
  "Configuration": {
    "FunctionName": "copyBackupSnapshot",
    "FunctionArn": "arn:aws:lambda:us-east-2:123456789:function:copyBackupSnapshot",
    "Runtime": "python3.8",
    "Role": "arn:aws:iam::123456789:role/service-role/copyBackupSnapshot-role-{hash}",
    "Handler": "lambda_function.lambda_handler",
    "CodeSize": 557,
    "Description": "Used to copy snapshots from AWS backup to an S3 cross account replication bucket",
    "Timeout": 3,
    "MemorySize": 128,
    "LastModified": "2021-08-16T12:25:26.595+0000",
    "CodeSha256": "hash",
    "Version": "$LATEST",
    "TracingConfig": {
      "Mode": "PassThrough"
    },
    "RevisionId": "id",
    "State": "Active",
    "LastUpdateStatus": "Successful",
    "PackageType": "Zip"
  }
}
```

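Lambda Code

```python
from datetime import datetime, timezone

import boto3

CMK_ARN = "kms-arn"  # ARN of the customer managed KMS key used to encrypt the export
EXPORT_BUCKET = "rds-cross-account-snapshots-backup"
EXPORT_ROLE_ARN = "role-that-allows-lambda-to-write-to-s3"  # role RDS assumes to write to S3

# Create the client once per container so warm invocations reuse it
client = boto3.client("rds")


def lambda_handler(event, context):
    # ARN of the snapshot created by the completed backup job
    snapshot_arn = event["resources"][0]

    # Compute the timestamp on every invocation: a module-level value would be
    # reused by warm containers and produce a duplicate ExportTaskIdentifier
    current_time = datetime.now(timezone.utc).strftime("%d-%m-%Y-%H-%M-%S")

    # Start an asynchronous export of the snapshot to the S3 bucket
    response = client.start_export_task(
        ExportTaskIdentifier="backup-" + current_time,
        SourceArn=snapshot_arn,
        S3BucketName=EXPORT_BUCKET,
        IamRoleArn=EXPORT_ROLE_ARN,
        KmsKeyId=CMK_ARN,
    )

    return {
        "statusCode": 200,
        "body": response["Status"],
    }
```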
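The handler depends only on the RDS client and the current time, so its logic can be checked locally without AWS. Below is a hypothetical refactoring that mirrors the handler above but injects both dependencies so a test can control them. The names make_handler and FakeRDS are introduced here for illustration and are not part of the original function.

```python
from datetime import datetime, timezone


def make_handler(rds_client, bucket, export_role_arn, kms_key_arn, clock=None):
    """Build a handler like the one above, with the client and clock injected."""
    clock = clock or (lambda: datetime.now(timezone.utc))

    def lambda_handler(event, context):
        snapshot_arn = event["resources"][0]
        task_id = "backup-" + clock().strftime("%d-%m-%Y-%H-%M-%S")
        response = rds_client.start_export_task(
            ExportTaskIdentifier=task_id,
            SourceArn=snapshot_arn,
            S3BucketName=bucket,
            IamRoleArn=export_role_arn,
            KmsKeyId=kms_key_arn,
        )
        return {"statusCode": 200, "body": response["Status"]}

    return lambda_handler


class FakeRDS:
    """Stand-in for boto3.client("rds") that records each export request."""

    def __init__(self):
        self.calls = []

    def start_export_task(self, **kwargs):
        self.calls.append(kwargs)
        return {"Status": "STARTING"}


fake = FakeRDS()
handler = make_handler(
    fake,
    "rds-cross-account-snapshots-backup",
    "role-that-allows-lambda-to-write-to-s3",
    "kms-arn",
    clock=lambda: datetime(2021, 8, 16, 12, 25, 26, tzinfo=timezone.utc),
)
result = handler({"resources": ["arn:aws:rds:us-east-2:123456789:snapshot:example"]}, None)
print(result)  # {'statusCode': 200, 'body': 'STARTING'}
print(fake.calls[0]["ExportTaskIdentifier"])  # backup-16-08-2021-12-25-26
```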

Lambda Role

The Lambda execution role must have the following policy to use the KMS key:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowUseOfCMKInAccount111122223333",
      "Effect": "Allow",
      "Action": [
        "kms:Encrypt",
        "kms:Decrypt",
        "kms:ReEncrypt*",
        "kms:GenerateDataKey*",
        "kms:DescribeKey"
      ],
      "Resource": [
        "arn:aws:kms:us-east-2:123456789:key/key_Id"
      ]
    }
  ]
}
```
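Besides the KMS permissions, the execution role also has to be allowed to start the export. Because start_export_task hands the export role to RDS, the caller also needs iam:PassRole on that role. Below is a minimal sketch of those extra statements built as a Python dict. The export role ARN is a hypothetical placeholder.

```python
import json

EXPORT_ROLE_ARN = "arn:aws:iam::123456789:role/rds-s3-export-role"  # hypothetical


def export_permissions_statements(export_role_arn):
    """IAM statements the Lambda role needs in addition to the KMS policy above."""
    return [
        {
            "Sid": "AllowStartExportTask",
            "Effect": "Allow",
            "Action": "rds:StartExportTask",
            "Resource": "*",
        },
        {
            # start_export_task passes export_role_arn to RDS, which requires PassRole
            "Sid": "AllowPassExportRole",
            "Effect": "Allow",
            "Action": "iam:PassRole",
            "Resource": export_role_arn,
        },
    ]


policy = {
    "Version": "2012-10-17",
    "Statement": export_permissions_statements(EXPORT_ROLE_ARN),
}
print(json.dumps(policy, indent=2))
```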

Write-to-S3-Role

The role passed as IamRoleArn to the export task, which RDS assumes when it writes the exported snapshot to S3, needs this policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "s3:ListStorageLensConfigurations",
        "s3:ListAccessPointsForObjectLambda",
        "s3:GetAccessPoint",
        "s3:PutAccountPublicAccessBlock",
        "s3:GetAccountPublicAccessBlock",
        "s3:ListAllMyBuckets",
        "s3:ListAccessPoints",
        "s3:ListJobs",
        "s3:PutStorageLensConfiguration",
        "s3:CreateJob"
      ],
      "Resource": "*"
    },
    {
      "Sid": "VisualEditor1",
      "Effect": "Allow",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::*/*",
        "arn:aws:s3:::rds-cross-account-snapshots-backup"
      ]
    }
  ]
}
```
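The policy above grants s3:* on every object in the account, which is broader than an export needs. Below is a minimal sketch of a tighter alternative, built as Python dicts. It follows the pattern in the RDS export documentation: a trust policy that lets the RDS export service assume the role, plus S3 actions scoped to the export bucket only. It is offered as an assumption to adapt, not as the configuration used in this setup.

```python
import json

BUCKET = "rds-cross-account-snapshots-backup"


def export_role_policies(bucket):
    """Trust and permissions policies for the role RDS assumes during an export."""
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The RDS export service, not the Lambda function, assumes this role
                "Principal": {"Service": "export.rds.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    permissions_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ExportPolicy",
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject*",
                    "s3:ListBucket",
                    "s3:GetObject*",
                    "s3:DeleteObject*",
                    "s3:GetBucketLocation",
                ],
                # Scope access to the export bucket and the objects in it
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }
    return trust_policy, permissions_policy


trust, permissions = export_role_policies(BUCKET)
print(json.dumps(trust, indent=2))
print(json.dumps(permissions, indent=2))
```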

KMS key policy (key shared with the backup account):

```json
{
  "Id": "key-consolepolicy-3",
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Enable IAM User Permissions",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::123456789:root"
      },
      "Action": "kms:*",
      "Resource": "*"
    },
    {
      "Sid": "Allow access for Key Administrators",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::123456789:user/name"
        ]
      },
      "Action": [
        "kms:Create*",
        "kms:Describe*",
        "kms:Enable*",
        "kms:List*",
        "kms:Put*",
        "kms:Update*",
        "kms:Revoke*",
        "kms:Disable*",
        "kms:Get*",
        "kms:Delete*",
        "kms:TagResource",
        "kms:UntagResource",
        "kms:ScheduleKeyDeletion",
        "kms:CancelKeyDeletion"
      ],
      "Resource": "*"
    },
    {
      "Sid": "Allow use of the key",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::123456789:user/tomere",
          "arn:aws:iam::123456789:role/service-role/copyBackupSnapshot-role-{hash}",
          "arn:aws:iam::123456789:role/service-role/s3crr_role_for_rds-cross-account-snapshots-backup",
          "arn:aws:iam::123456789:role/service-role/AWSBackupDefaultServiceRole",
          "arn:aws:iam::123456789:role/Admin__SSO",
          "arn:aws:iam::987654321:root"
        ]
      },
      "Action": [
        "kms:Encrypt",
        "kms:Decrypt",
        "kms:ReEncrypt*",
        "kms:GenerateDataKey*",
        "kms:DescribeKey"
      ],
      "Resource": "*"
    },
    {
      "Sid": "Allow attachment of persistent resources",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::123456789:role/service-role/copyBackupSnapshot-role-{hash}",
          "arn:aws:iam::123456789:role/service-role/s3crr_role_for_rds-cross-account-snapshots-backup",
          "arn:aws:iam::123456789:role/service-role/AWSBackupDefaultServiceRole",
          "arn:aws:iam::123456789:role/Admin__SSO",
          "arn:aws:iam::987654321:root"
        ]
      },
      "Action": [
        "kms:CreateGrant",
        "kms:ListGrants",
        "kms:RevokeGrant"
      ],
      "Resource": "*",
      "Condition": {
        "Bool": {
          "kms:GrantIsForAWSResource": "true"
        }
      }
    }
  ]
}
```
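With several statements and wildcard actions, it is easy to lose track of who may do what with the key. Below is a simplified sketch that lists the AWS principals an Allow statement grants a given action to. It matches IAM-style wildcards case-insensitively and ignores Deny statements, Conditions, and NotAction. An account root principal such as arn:aws:iam::987654321:root only delegates to that account's IAM policies, so users and roles in the backup account still need their own IAM permissions. The trimmed policy in the usage example is illustrative.

```python
from fnmatch import fnmatchcase


def principals_allowed(policy, action):
    """List AWS principals that some Allow statement grants `action` to.

    Simplified: ignores Deny statements, Conditions and NotAction.
    """
    allowed = []
    for stmt in policy["Statement"]:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        # IAM action names are case-insensitive and may contain * wildcards
        if not any(fnmatchcase(action.lower(), a.lower()) for a in actions):
            continue
        principals = stmt.get("Principal", {}).get("AWS", [])
        if isinstance(principals, str):
            principals = [principals]
        for principal in principals:
            if principal not in allowed:
                allowed.append(principal)
    return allowed


key_policy = {  # trimmed version of the key policy above
    "Statement": [
        {"Effect": "Allow", "Principal": {"AWS": "arn:aws:iam::123456789:root"},
         "Action": "kms:*", "Resource": "*"},
        {"Effect": "Allow",
         "Principal": {"AWS": ["arn:aws:iam::987654321:root"]},
         "Action": ["kms:Decrypt", "kms:ReEncrypt*", "kms:GenerateDataKey*"],
         "Resource": "*"},
    ]
}
print(principals_allowed(key_policy, "kms:Decrypt"))
# ['arn:aws:iam::123456789:root', 'arn:aws:iam::987654321:root']
```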

S3 Configuration

AWS Key Management Service (KMS) lets you create, manage, and control encryption keys in your AWS account.

I used a customer managed key (CMK) to encrypt the daily snapshots, and shared it with both accounts through a combination of the key policy and IAM policies.

This way, you manage and verify your own encryption, and both accounts can use the same customer managed key.

S3 lifecycle rules let you define what happens to the objects in a bucket once a configured amount of time has passed.

Objects can be moved to a different storage class or deleted.

This feature let me set a 7-day retention for the snapshots exported to S3, so old snapshots do not pile up and the cost of the backup bucket stays low.
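Below is a minimal sketch of such a 7-day rule as a payload for boto3's put_bucket_lifecycle_configuration. Replication requires versioning, so expiring an object in the source bucket leaves a noncurrent version behind. For that reason the sketch also expires noncurrent versions; the 1-day value for them is an assumption. Lifecycle expirations are not replicated, so the bucket in the backup account would need its own rule to get the same retention.

```python
def build_lifecycle_config(days=7, noncurrent_days=1):
    """Lifecycle configuration that expires exported snapshot objects."""
    return {
        "Rules": [
            {
                "ID": f"expire-exports-after-{days}-days",
                "Filter": {"Prefix": ""},  # apply to every object in the bucket
                "Status": "Enabled",
                "Expiration": {"Days": days},
                # In a versioned bucket, expiration leaves noncurrent versions behind;
                # remove them too (noncurrent_days is an assumed value)
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
            }
        ]
    }


def apply_lifecycle(s3_client, bucket, days=7):
    # s3_client is expected to be boto3.client("s3")
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=build_lifecycle_config(days)
    )
```

For example, apply_lifecycle(boto3.client("s3"), "rds-cross-account-snapshots-backup") would apply the rule to the export bucket.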